Let’s be honest: working with large language models (LLMs) can feel overwhelming at first. There’s a lot of noise out there, and the learning curve? Not exactly gentle. But once you strip away the jargon and focus on what matters, you’ll realize that mastering LLMs isn’t as out of reach as it seems. You just need to start with the right approach—and stick to it.

So, whether you're a curious newcomer looking to get familiar with LLMs or someone with a technical background aiming to go deeper, this guide is for you. Let's break it down step by step.

You might think you can skip the basics, especially if you’ve read a couple of articles or watched a few tutorials. But understanding how LLMs really work is the difference between guessing and knowing.

Start with what an LLM is: a deep learning model trained on a huge amount of text data. It processes language by predicting what comes next in a sequence. That's it. Sounds simple, right? But under the hood are layers of transformers, attention mechanisms, and token embeddings. Don’t worry about memorizing the lingo right away. Focus instead on the core concept: LLMs learn patterns in language and respond based on what they’ve seen before.
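To make "predicting what comes next" concrete, here is a toy sketch. It is not how an LLM is built (there are no transformers or embeddings here); it only counts which word follows which in a tiny made-up corpus, then predicts the most frequent follower. The corpus and function names are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Tiny illustrative corpus (an assumption, not real training data).
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigram(corpus)
print(predict_next(model, "the"))  # most common word seen after "the"
print(predict_next(model, "dog"))  # never seen, so there is no prediction
```

A real LLM replaces the counting table with a neural network and looks at far more context than one word, but the loop is the same idea: given what came before, pick a likely continuation.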

Reading about LLMs is one thing. Using them? That’s where things click.

Thanks to open-source projects, you don’t need a supercomputer to try things out. Start small with models like GPT-2 or LLaMA on platforms like Hugging Face. Spin up a notebook, run a few prompts, and observe the outputs. You’ll quickly notice how these models respond, where they shine, and where they fall short.

And here’s a tip: don’t just run sample prompts. Tweak them. Test them. Feed the model something weird. You’ll learn more from failed outputs than polished ones.
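One way to make that kind of poking around systematic is a small harness that runs a batch of prompts and flags outputs that look off. The sketch below is only an outline: `stub_generate` is a hypothetical stand-in for whatever model call you use, and the "repetitive" threshold of 0.5 is an arbitrary assumption.

```python
def probe(generate, prompts):
    """Run each prompt through `generate` and flag suspicious outputs."""
    report = []
    for prompt in prompts:
        output = generate(prompt)
        words = output.split()
        flags = []
        if not words:
            flags.append("empty")
        # A low ratio of distinct words suggests the model is looping.
        elif len(set(words)) / len(words) < 0.5:
            flags.append("repetitive")
        report.append({"prompt": prompt, "output": output, "flags": flags})
    return report


def stub_generate(prompt):
    """Hypothetical stand-in for a real model call; replace with your own."""
    if not prompt.strip():
        return ""
    if prompt.endswith("?"):
        return "yes yes yes yes yes yes"
    return "Here is a short answer about " + prompt.lower()


prompts = ["Explain attention in one sentence", "Is water wet?", "   "]
for row in probe(stub_generate, prompts):
    print(repr(row["prompt"]), "->", row["flags"] or "ok")
```

Swap the stub for a real model and keep adding strange prompts to the list. The flagged rows are usually the ones worth reading closely.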

Once you’ve gotten your feet wet with pre-trained models, you might be tempted to jump straight into fine-tuning. And that’s great—but take a breath first.

Fine-tuning is about giving a model a nudge in a certain direction. Maybe you want it to write product descriptions, or maybe you want it to answer support tickets. Whatever the case, keep your dataset small and clean. Use parameter-efficient methods such as LoRA, available through libraries like Hugging Face's PEFT, to keep the process lightweight.
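Why does LoRA keep things lightweight? Instead of updating a full weight matrix of size d x k, it freezes that matrix and trains two thin matrices of sizes d x r and r x k, where the rank r is small. The arithmetic below is a rough sketch of the savings for one layer. The layer size of 768 is an illustrative assumption, and the helper names are made up.

```python
def full_params(d, k):
    """Values updated when fine-tuning a full d x k weight matrix."""
    return d * k


def lora_params(d, k, r):
    """Values trained by LoRA: B is d x r and A is r x k; W stays frozen."""
    return r * (d + k)


d, k = 768, 768  # illustrative layer size (assumption, not from the article)
for r in (4, 8, 16):
    trained = lora_params(d, k, r)
    share = trained / full_params(d, k)
    print(f"rank {r:>2}: {trained:,} trainable values ({share:.2%} of the full matrix)")
```

Even at the largest rank shown, only a few percent of the layer's values are trained, which is why this style of fine-tuning fits on modest hardware.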

And don’t chase perfection. The goal isn’t to create the smartest model ever—it’s to make one that fits your task better than the base version.

Here’s the part people overlook all the time: prompts are everything.

You can spend days fine-tuning a model and still get lackluster results if your prompts are clumsy. Prompt engineering is about crafting input that makes sense to the model. Be specific, be structured, and most importantly, test variations.

Try changing the tone. Use bullet points. Rephrase your instructions. Even something as minor as punctuation can affect the output.

Prompt engineering isn’t a one-time step—it’s something you refine constantly. The more time you spend here, the more control you’ll gain over how the model behaves.
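Testing variations is easier when the variants are generated rather than typed by hand. Here is a minimal sketch that crosses a couple of tones with a couple of output formats and labels each result so outputs can be traced back later. The template, tones, formats, and task are all made-up examples.

```python
from itertools import product

TEMPLATE = "{tone}\nTask: {task}\nFormat: {fmt}"

# Example dimensions to vary (assumptions; pick ones that suit your task).
tones = ["You are a concise assistant.", "You are a friendly assistant."]
formats = ["three bullet points", "one short paragraph"]


def build_variants(task):
    """Return every tone/format combination as a labelled prompt."""
    variants = []
    for (t_idx, tone), (f_idx, fmt) in product(enumerate(tones), enumerate(formats)):
        label = f"tone{t_idx}-fmt{f_idx}"
        variants.append((label, TEMPLATE.format(tone=tone, task=task, fmt=fmt)))
    return variants


variants = build_variants("Summarize the refund policy for a customer.")
for label, prompt in variants:
    print(f"--- {label} ---\n{prompt}\n")
```

Send each variant to the same model with the same settings, and the labels tell you which change in wording produced which change in output.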

One of the biggest mistakes people make is assuming a model is “good” based on gut feeling.

You need metrics. For text generation, that might be BLEU, ROUGE, or perplexity. For classification, it could be accuracy, F1 score, or recall. But here’s the thing: numbers alone aren’t the full story.
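To show what a couple of these numbers actually measure, here is a small sketch in plain Python. It computes precision, recall, and F1 for a binary classifier, and perplexity from the probabilities a model assigned to each correct next token. The labels and probabilities are made-up values. In practice you would likely reach for an established library rather than hand-rolled functions.

```python
import math


def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def perplexity(token_probs):
    """Perplexity is exp of the average negative log-probability per token."""
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Made-up labels: 1 is the positive class.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
print(precision_recall_f1(y_true, y_pred))

# Made-up probabilities the model gave to each correct next token.
print(perplexity([0.25, 0.25, 0.25, 0.25]))
```

A model that gives every correct token a probability of 0.25 has a perplexity of about 4. Roughly speaking, it is as uncertain as if it were choosing among four equally likely options at each step.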

Pair your metrics with human judgment. If the model is generating content for people, you need real eyes on those outputs. Ask for feedback. Compare versions side-by-side. The balance between objective scores and subjective quality is what separates average developers from great ones.

LLMs are evolving fast. Every few months, there’s a new paper, a new model, or a new approach. It’s tempting to chase every update, but you don’t have to. Pick a few trusted sources—maybe a newsletter, a GitHub repo, or a researcher you follow on social platforms. Stick to those. Learn deeply rather than chase breadth. When you're grounded in the fundamentals, new tools are easier to understand because you know where they fit. Don’t worry about being the first to try everything. Focus on mastering what’s already proven useful.

At some point, you have to stop reading and start building.

Maybe it’s a chatbot. Maybe it’s a smart summarizer for your emails. Maybe it’s just a script that helps write blog outlines. Whatever it is, make it real. Projects are how everything comes together: model understanding, fine-tuning, prompt engineering, evaluation—it all lands when you apply it to something concrete. And you’ll learn more from launching something simple than trying to perfect something complex. Start with what you know. Add LLM capabilities to tools you already use. Over time, your projects will grow. So will your confidence.

Once these steps become habit, you stop feeling like you're on the outside looking in. Suddenly, terms like "token embeddings" and "zero-shot learning" aren’t intimidating; they’re part of your vocabulary. More importantly, you stop relying on others to explain what LLMs can do. You figure it out for yourself.

And that’s the goal here: to move from observer to practitioner. Not because it looks good on a résumé, but because the ability to understand and use language models is becoming as essential as knowing how to code or manage data.

It's not magic. It's just a method.

Mastering LLMs isn’t about mastering everything at once. It’s about knowing what matters—and focusing there.

Begin with the basics. Get your hands dirty. Understand your tools. Don’t overthink fine-tuning. Learn how to write good prompts. Trust real feedback. And build things that solve actual problems. When you look back, what’ll surprise you most is how quickly it all started to make sense. No fluff. No shortcuts. Just clear steps, consistent effort, and a willingness to learn by doing.

Advertisement

How to use the Nightshade AI tool to protect your digital artwork from being used by generative AI models without your permission. Keep your art safe online

Curious about AdaHessian and how it compares to Adam? Discover how this second-order optimizer can improve deep learning performance with better generalization and stability

Need to merge tables in SQL but not sure which method fits best? This guide breaks down 11 practical ways to combine tables, making it easier to get the exact results you need without any confusion

Confused between Git reset and revert? Learn the real difference, when to use each, and how to safely undo mistakes in your projects without breaking anything

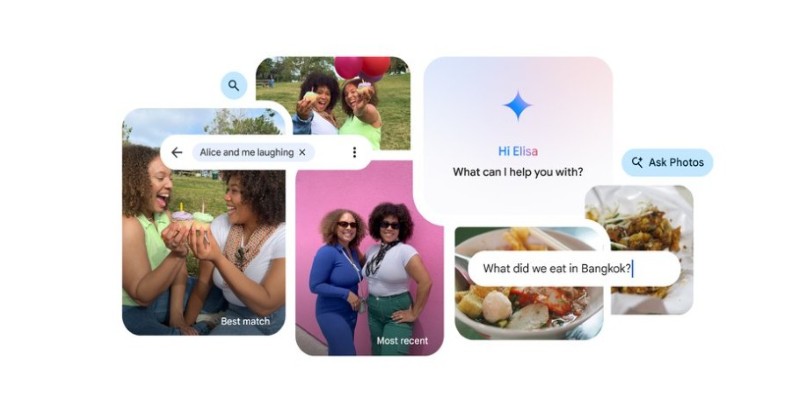

Ever wish your photo app could just understand what you meant? Discover how Google’s ‘Ask Photos’ lets you search memories using natural questions and context

Applying to the Big 4? Learn how Overleaf and ChatGPT help you build a resume that passes ATS filters and impresses recruiters at Deloitte, PwC, EY, and KPMG

Ever wondered how you can make money using AI? Explore how content creators, freelancers, and small business owners are generating income with AI tools today

Heard about Grok but not sure what it does or why it’s different? Find out how much it costs, who can use it, and whether this edgy AI chatbot is the right fit for you

Learn why exploding interest in GenAI makes AI governance more important than ever before.

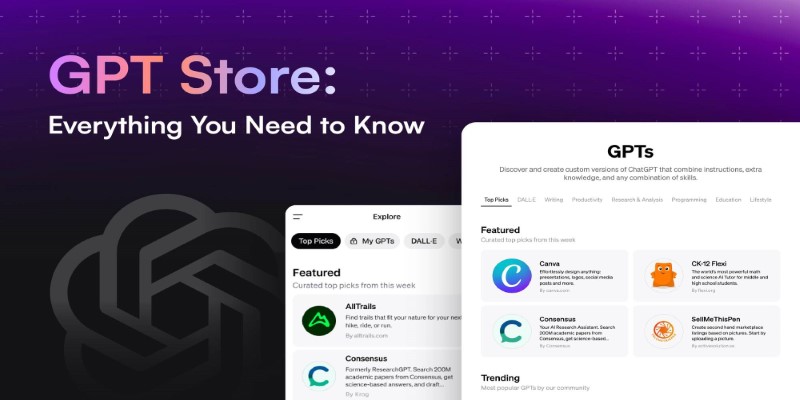

Looking to explore custom chatbots tailored to your needs? Discover how to access and use OpenAI's GPT Store to enhance your ChatGPT experience with specialized GPTs.

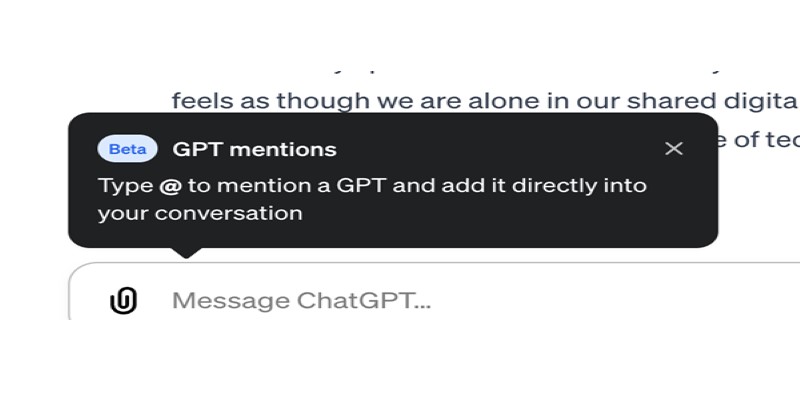

Curious about how to bring custom GPTs into your ChatGPT conversations with just a mention? Learn how GPT Mentions work and how you can easily include custom GPTs in any chat for smoother interactions

Struggling to keep up with social media content? These AI tools can help you write better, plan faster, and stay consistent without feeling overwhelmed